The 1.5 Sigma Shift in Six Sigma

Six Sigma is one of the most widely respected business process improvement methodologies available. It comes with a wealth of statistical tools to help businesses understand what aspects of their processes need optimization, and how to practically achieve near perfection and virtually 100% reliability.

Despite this, there is a minor controversy, and some purely academic debate, about what a Six Sigma process actually is and whether some of the statistical assumptions at its core have merit.

But let’s cover the basics before we examine the discussion and controversy.

What is the 1.5 Sigma Shift

As you might know, Six Sigma is a methodology that Motorola developed in an attempt to reduce defects to such a low level that they become virtually non-existent. When the company first started evaluating and optimizing processes, it soon realized that processes deteriorate, or shift in performance, over longer periods of time. That’s why short-term data can’t be considered fully accurate or fully predictive of how a process will behave in the long term. There are just too many factors that change subtly with time and are impossible to control or monitor correctly.

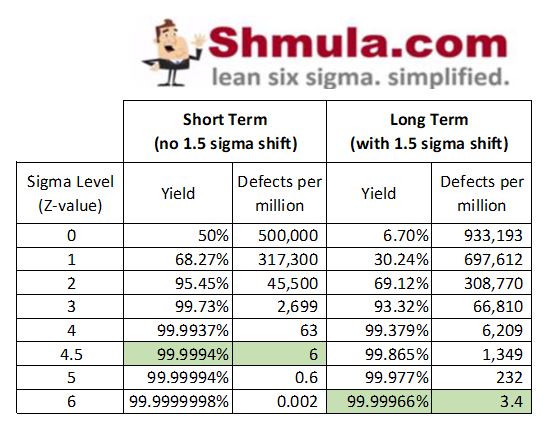

After years of data collection and empirical analysis, Motorola came to the conclusion (mentioned in Six Sigma by Mikel Harry and Richard Schroeder) that the process mean usually drifts by about 1.5 sigma over time. Since this turned out to be a reliable occurrence, they incorporated the shift into their Six Sigma methodology. That’s why there are now two values one can look at when assigning a sigma value to a process: Short Term Dynamic Mean Variation and Long Term Dynamic Mean Variation. A process can meet the standard statistical criteria for six sigma in the short term, but because the Motorola methodology applies a 1.5 sigma shift, it only has to meet the criteria for 4.5 sigma in the long term to be called a Six Sigma process.
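To make the arithmetic concrete, here is a minimal Python sketch of the conversion described above. It assumes a normally distributed process and counts only the tail on the side the mean drifts toward, which is the usual convention behind published sigma tables. The function names `upper_tail` and `long_term_dpmo` are made up for this illustration and are not part of any Six Sigma toolkit.

```python
import math


def upper_tail(z):
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def long_term_dpmo(short_term_sigma, shift=1.5):
    """Defects per million opportunities once the mean has drifted.

    Assumption: the mean moves `shift` standard deviations toward the
    nearer specification limit, and only that tail is counted.
    """
    return upper_tail(short_term_sigma - shift) * 1_000_000


for level in (3, 4, 5, 6):
    print(f"short-term {level} sigma -> long-term {level - 1.5} sigma, "
          f"about {long_term_dpmo(level):.1f} DPMO")
```

With the default shift of 1.5, a short-term level of 6 lands at roughly 3.4 DPMO, which is the figure usually quoted for a Six Sigma process.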

As you can see in the table below, a long-term sigma level of 4.5 corresponds to a short-term sigma level of 6.

Why is the 1.5 Sigma Shift Useful

The reasoning behind implementing such a shift while looking at processes in the long-term is based on the idea that an organization does not want to expend unreasonable amounts of time, effort and resources trying to optimize something that is practically out of their control. Natural process deterioration or drift occurs because of a host of factors that are outside the scope of the methodology, so adjusting the process expectations and guidelines to fit reality is the most productive approach.

Additionally, it’s important to note that 1.5 is a value Motorola arrived at based on their own empirical research, and it doesn’t have to apply to all processes. Qualified professionals can use the data available about a specific process and assign a different value to the shift between short-term and long-term expectations, one that is adequate for the particular situation. This makes Six Sigma more flexible and allows it to adapt to all kinds of organizations.
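As a rough illustration of how the choice of shift changes the reported number, the sketch below converts an observed long-term defect rate into a sigma level under different assumed shifts. It uses `statistics.NormalDist` from the Python standard library; the function name `reported_sigma_level` is hypothetical, and the one-tail normal model is an assumption rather than something the methodology prescribes for every process.

```python
from statistics import NormalDist


def reported_sigma_level(dpmo, shift=1.5):
    """Sigma level reported for a long-term defect rate.

    Assumptions: defects follow a one-tail normal model, and `dpmo`
    is strictly between 0 and 1,000,000.
    """
    # z-score whose upper tail equals the observed defect fraction
    long_term_z = NormalDist().inv_cdf(1 - dpmo / 1_000_000)
    # Adding the assumed shift gives the short-term (reported) level
    return long_term_z + shift


for shift in (0.0, 1.0, 1.5):
    level = reported_sigma_level(3.4, shift)
    print(f"assumed shift {shift}: reported level {level:.2f} sigma")
```

The same 3.4 DPMO reads as roughly 4.5 sigma with no shift and roughly 6 sigma with the 1.5 shift, so the shift value used should always be stated alongside the sigma level.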

The So-Called Controversy

Let’s be clear: there isn’t a real controversy on the matter. This is more of an academic discussion, with a bit of pedantry about the name of the methodology. Statistically speaking, a true six sigma process should produce only about 2 defects per billion units, while in the context of the Six Sigma methodology any process that produces fewer than 3.4 defects per million units is referred to as a Six Sigma process. As we’ve mentioned, that rate actually corresponds to 4.5 sigma in purely statistical terms. Of course, this is not an actual discrepancy, but a feature that manages expectations according to reality and makes a process more robust in the long-term.
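The two defect rates quoted above can be reproduced with a short Python sketch. It assumes a normal distribution with specification limits at plus and minus six standard deviations, and counts both tails. The helper names `upper_tail` and `defect_fraction` are illustrative only.

```python
import math


def upper_tail(z):
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def defect_fraction(sigma_level, shift=0.0):
    """Fraction of output outside two-sided limits at +/- sigma_level.

    Assumption: the mean has moved `shift` standard deviations toward
    one limit, so one tail grows and the other shrinks.
    """
    return upper_tail(sigma_level - shift) + upper_tail(sigma_level + shift)


centered = defect_fraction(6.0)            # purely statistical six sigma
shifted = defect_fraction(6.0, shift=1.5)  # with the 1.5 sigma shift

print(f"centered: {centered * 1e9:.2f} defects per billion")
print(f"shifted:  {shifted * 1e6:.2f} defects per million")
```

Under these assumptions the centered process gives roughly 2 defects per billion and the shifted one roughly 3.4 per million. Calling `defect_fraction(4.5)` instead counts both tails of a centered 4.5 sigma process and gives about 6.8 per million, twice the one-tail figure.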

What do you think about the 1.5 sigma shift? Do you like it and find it useful, or should the Six Sigma community move away from it to reduce confusion? Add your comments below…

“this is not an actual discrepancy, but a feature that manages expectations according to reality and makes a process more robust in the long-term.” – how is this any better than calculating the sigma level using a 0.5 sigma, 3 sigma, or 10 sigma shift? Furthermore, if you actually expect the process to perform as a 4.5 sigma one, why report it as 6 sigma in the first place? Why not report it as 4.5 sigma? I’ve made a lengthier argument against sigma shift as a concept here: https://www.gigacalculator.com/articles/what-is-six-sigma-process-control-and-why-most-get-it-wrong-1-5-sigma-shift/

Your table has a typo.

At the 4.5 sigma level (short-term), the DPMO is 6.8 (not 3.4) as shown.

Refer to http://www.sixsigmadigest.com/support-files/DPMO-Sigma-Table.pdf

Thank you for catching the error. We have fixed the typo.